Software projects, like any others, sometimes fail. Long-running (multi-year), larger projects carry a higher risk of being cancelled. And it is precisely as the completion deadline approaches that the decision ends up on the table: is it worth pouring another pile of money into a project that will not be finished on schedule, or whose features will differ substantially from what was originally planned? That is the moment when one either makes one more push or decides to plug the drain. By way of example, statistics suggest that fully successful projects (finished on time and within budget) account for only about 30%.

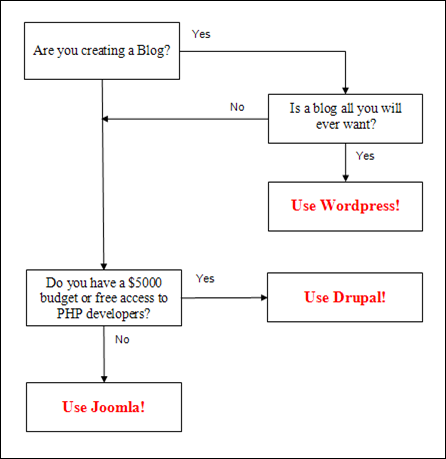

The article at the end of this post, in English, is an interesting essay on the subject. Among the failure factors it identifies, these stand out:

- Unrealistic or unarticulated project goals

- Inaccurate estimates of needed resources

- Badly defined system requirements

- Poor reporting of the project's status

- Unmanaged risks

- Poor communication among customers, developers, and users

- Use of immature technology

- Inability to handle the project's complexity

- Sloppy development practices

- Poor project management

- Stakeholder politics

- Commercial pressures

All this comes up because of the government's announcement that it will spend 15 million euros on a software project for the Ministry of Education, and another 15 million on the operational maintenance of that software over four years.

Fifteen million euros to develop a software project is a huge amount of money. To get a sense of scale, a Google search returns a few examples that help put this figure in context (for simplicity, I assume €1 = US$1). See, for example, this one, this one and this one. Looked at another way, 15 million euros would pay for a team of about 100 people working for two years, with each team member "earning" €6,000 gross a month (gross so as to cover taxes, operating expenses and business profit). It really is a lottery win of a deal.
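As a rough check of the team-cost arithmetic above, here is a minimal sketch. The function name is hypothetical; only the 15 million euros, the 100 people and the two years come from the text, and the two years are assumed to mean 24 paid months.

```python
def monthly_cost_per_person(budget_eur: float, team_size: int, months: int) -> float:
    """Budget available per team member per month, in euros."""
    if team_size <= 0 or months <= 0:
        raise ValueError("team_size and months must be positive")
    return budget_eur / (team_size * months)


# Figures from the text: EUR 15 million, about 100 people, two years (assumed 24 months).
per_person = monthly_cost_per_person(15_000_000, team_size=100, months=24)
print(f"{per_person:.0f} EUR per person per month")  # prints "6250 EUR per person per month"
```

Under these assumptions the result is in the same range as the €6,000 quoted above.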

That being so, one would assume that best practices were used to define the project's goals, minimising from the outset the first risk on the list above. One would likewise assume that a sound specification (caderno de encargos) was drawn up and put out to tender, so as to obtain the best solution.

No. None of this was done. On the contrary, the government chose to spend an A4 page of the Diário da República on propaganda and to hand over a project of this size by direct award (ajuste directo). What are the project's goals? Nobody knows. Only a terse description was published.

The issue here is that it is impossible to tell whether 15 million euros is a lot or a little for the project in question, for the simple reason that nobody knows what is supposed to be built. All we know, as we have seen, is that a sum of this size corresponds to a project of considerable scale. That makes the carelessness in defining it all the more astonishing.

More surprising than the cost of the development project is the 15 million euros to be spent over four years on its operational maintenance. That is more than 10 thousand euros a day for four years (365 days a year). So what astronomical output is going to be produced every day? Nobody knows.
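The daily figure can be checked with a short sketch. The function name is illustrative; the 15 million euros, the four years and the 365-day year are taken from the paragraph above.

```python
def daily_cost(total_eur: float, years: int, days_per_year: int = 365) -> float:
    """Average spend per calendar day, in euros, over the whole period."""
    if years <= 0 or days_per_year <= 0:
        raise ValueError("years and days_per_year must be positive")
    return total_eur / (years * days_per_year)


# Figures from the text: EUR 15 million of maintenance spread over four years.
per_day = daily_cost(15_000_000, years=4)
print(f"{per_day:,.0f} EUR per day")  # prints "10,274 EUR per day"
```

Counting only working days instead of calendar days would push the daily figure higher still.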

Of the risks listed above that cause projects to fail, there will be few that do not materialise in this project. Is this the government's Simplex vision?

Article: Why Software Fails, by Robert N. Charette

First Published September 2005

We waste billions of dollars each year on entirely preventable mistakes

Have you heard the one about the disappearing warehouse? One day, it vanished, not from physical view, but from the watchful eyes of a well-known retailer's automated distribution system. A software glitch had somehow erased the warehouse's existence, so that goods destined for the warehouse were rerouted elsewhere, while goods at the warehouse languished. Because the company was in financial trouble and had been shuttering other warehouses to save money, the employees at the "missing" warehouse kept quiet. For three years, nothing arrived or left. Employees were still getting their paychecks, however, because a different computer system handled the payroll. When the software glitch finally came to light, the merchandise in the warehouse was sold off, and upper management told employees to say nothing about the episode.

This story has been floating around the information technology industry for 20-some years. It's probably apocryphal, but for those of us in the business, it's entirely plausible. Why? Because episodes like this happen all the time. Last October, for instance, the giant British food retailer J Sainsbury PLC had to write off its US $526 million investment in an automated supply-chain management system. It seems that merchandise was stuck in the company's depots and warehouses and was not getting through to many of its stores. Sainsbury was forced to hire about 3000 additional clerks to stock its shelves manually [see photo, "Market Crash"].

This is only one of the latest in a long, dismal history of IT projects gone awry [see table, "Software Hall of Shame" for other notable fiascoes]. Most IT experts agree that such failures occur far more often than they should. What's more, the failures are universally unprejudiced: they happen in every country; to large companies and small; in commercial, nonprofit, and governmental organizations; and without regard to status or reputation. The business and societal costs of these failures—in terms of wasted taxpayer and shareholder dollars as well as investments that can't be made—are now well into the billions of dollars a year.

The problem only gets worse as IT grows ubiquitous. This year, organizations and governments will spend an estimated $1 trillion on IT hardware, software, and services worldwide. Of the IT projects that are initiated, from 5 to 15 percent will be abandoned before or shortly after delivery as hopelessly inadequate. Many others will arrive late and over budget or require massive reworking. Few IT projects, in other words, truly succeed.

The biggest tragedy is that software failure is for the most part predictable and avoidable. Unfortunately, most organizations don't see preventing failure as an urgent matter, even though that view risks harming the organization and maybe even destroying it. Understanding why this attitude persists is not just an academic exercise; it has tremendous implications for business and society.

SOFTWARE IS EVERYWHERE. It's what lets us get cash from an ATM, make a phone call, and drive our cars. A typical cellphone now contains 2 million lines of software code; by 2010 it will likely have 10 times as many. General Motors Corp. estimates that by then its cars will each have 100 million lines of code.

The average company spends about 4 to 5 percent of revenue on information technology, with those that are highly IT dependent—such as financial and telecommunications companies—spending more than 10 percent on it. In other words, IT is now one of the largest corporate expenses outside employee costs. Much of that money goes into hardware and software upgrades, software license fees, and so forth, but a big chunk is for new software projects meant to create a better future for the organization and its customers.

Governments, too, are big consumers of software. In 2003, the United Kingdom had more than 100 major government IT projects under way that totaled $20.3 billion. In 2004, the U.S. government cataloged 1200 civilian IT projects costing more than $60 billion, plus another $16 billion for military software.

Any one of these projects can cost over $1 billion. To take two current examples, the computer modernization effort at the U.S. Department of Veterans Affairs is projected to run $3.5 billion, while automating the health records of the UK's National Health Service is likely to cost more than $14.3 billion for development and another $50.8 billion for deployment.

Such megasoftware projects, once rare, are now much more common, as smaller IT operations are joined into "systems of systems." Air traffic control is a prime example, because it relies on connections among dozens of networks that provide communications, weather, navigation, and other data. But the trick of integration has stymied many an IT developer, to the point where academic researchers increasingly believe that computer science itself may need to be rethought in light of these massively complex systems.

When a project fails, it jeopardizes an organization's prospects. If the failure is large enough, it can steal the company's entire future. In one stellar meltdown, a poorly implemented resource planning system led FoxMeyer Drug Co., a $5 billion wholesale drug distribution company in Carrollton, Texas, to plummet into bankruptcy in 1996.

IT failure in government can imperil national security, as the FBI's Virtual Case File debacle has shown. The $170 million VCF system, a searchable database intended to allow agents to "connect the dots" and follow up on disparate pieces of intelligence, instead ended five months ago without any system's being deployed [see "Who Killed the Virtual Case File?" in this issue].

IT failures can also stunt economic growth and quality of life. Back in 1981, the U.S. Federal Aviation Administration began looking into upgrading its antiquated air-traffic-control system, but the effort to build a replacement soon became riddled with problems [see photo, "Air Jam"]. By 1994, when the agency finally gave up on the project, the predicted cost had tripled, more than $2.6 billion had been spent, and the expected delivery date had slipped by several years. Every airplane passenger who is delayed because of gridlocked skyways still feels this cancellation; the cumulative economic impact of all those delays on just the U.S. airlines (never mind the passengers) approaches $50 billion.

Worldwide, it's hard to say how many software projects fail or how much money is wasted as a result. If you define failure as the total abandonment of a project before or shortly after it is delivered, and if you accept a conservative failure rate of 5 percent, then billions of dollars are wasted each year on bad software.

For example, in 2004, the U.S. government spent $60 billion on software (not counting the embedded software in weapons systems); a 5 percent failure rate means $3 billion was probably wasted. However, after several decades as an IT consultant, I am convinced that the failure rate is 15 to 20 percent for projects that have budgets of $10 million or more. Looking at the total investment in new software projects—both government and corporate—over the last five years, I estimate that project failures have likely cost the U.S. economy at least $25 billion and maybe as much as $75 billion.
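The failure-cost estimate above can be illustrated with a short sketch. The function name is hypothetical, and applying the 15 to 20 percent rates to the whole $60 billion is an assumption made only for illustration, since the article reserves those rates for projects with budgets of $10 million or more.

```python
def wasted_spend(total_spend_usd: float, failure_rate: float) -> float:
    """Spend lost to abandoned projects at a given failure rate (0.0 to 1.0)."""
    if not 0.0 <= failure_rate <= 1.0:
        raise ValueError("failure_rate must be between 0 and 1")
    return total_spend_usd * failure_rate


# Figure from the text: $60 billion of U.S. government software spending in 2004.
spend_2004_usd = 60e9

# 5% is the article's conservative rate; 15% and 20% are shown only for comparison.
for rate in (0.05, 0.15, 0.20):
    wasted_billions = wasted_spend(spend_2004_usd, rate) / 1e9
    print(f"{rate:.0%} failure rate -> ${wasted_billions:.0f} billion")
```

At the conservative 5 percent rate this reproduces the $3 billion mentioned in the text.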

Of course, that $75 billion doesn't reflect projects that exceed their budgets—which most projects do. Nor does it reflect projects delivered late—which the majority are. It also fails to account for the opportunity costs of having to start over once a project is abandoned or the costs of bug-ridden systems that have to be repeatedly reworked.

Then, too, there's the cost of litigation from irate customers suing suppliers for poorly implemented systems. When you add up all these extra costs, the yearly tab for failed and troubled software conservatively runs somewhere from $60 billion to $70 billion in the United States alone. For that money, you could launch the space shuttle 100 times, build and deploy the entire 24-satellite Global Positioning System, and develop the Boeing 777 from scratch—and still have a few billion left over.

Why do projects fail so often?

Among the most common factors:

* Unrealistic or unarticulated project goals

* Inaccurate estimates of needed resources

* Badly defined system requirements

* Poor reporting of the project's status

* Unmanaged risks

* Poor communication among customers, developers, and users

* Use of immature technology

* Inability to handle the project's complexity

* Sloppy development practices

* Poor project management

* Stakeholder politics

* Commercial pressures

Of course, IT projects rarely fail for just one or two reasons. The FBI's VCF project suffered from many of the problems listed above. Most failures, in fact, can be traced to a combination of technical, project management, and business decisions. Each dimension interacts with the others in complicated ways that exacerbate project risks and problems and increase the likelihood of failure.

Consider a simple software chore: a purchasing system that automates the ordering, billing, and shipping of parts, so that a salesperson can input a customer's order, have it automatically checked against pricing and contract requirements, and arrange to have the parts and invoice sent to the customer from the warehouse.

The requirements for the system specify four basic steps. First, there's the sales process, which creates a bill of sale. That bill is then sent through a legal process, which reviews the contractual terms and conditions of the potential sale and approves them. Third in line is the provision process, which sends out the parts contracted for, followed by the finance process, which sends out an invoice.

Let's say that as the first process, for sales, is being written, the programmers treat every order as if it were placed in the company's main location, even though the company has branches in several states and countries. That mistake, in turn, affects how tax is calculated, what kind of contract is issued, and so on.

The sooner the omission is detected and corrected, the better. It's kind of like knitting a sweater. If you spot a missed stitch right after you make it, you can simply unravel a bit of yarn and move on. But if you don't catch the mistake until the end, you may need to unravel the whole sweater just to redo that one stitch.

If the software coders don't catch their omission until final system testing—or worse, until after the system has been rolled out—the costs incurred to correct the error will likely be many times greater than if they'd caught the mistake while they were still working on the initial sales process.

And unlike a missed stitch in a sweater, this problem is much harder to pinpoint; the programmers will see only that errors are appearing, and these might have several causes. Even after the original error is corrected, they'll need to change other calculations and documentation and then retest every step.

In fact, studies have shown that software specialists spend about 40 to 50 percent of their time on avoidable rework rather than on what they call value-added work, which is basically work that's done right the first time. Once a piece of software makes it into the field, the cost of fixing an error can be 100 times as high as it would have been during the development stage.

If errors abound, then rework can start to swamp a project, like a dinghy in a storm. What's worse, attempts to fix an error often introduce new ones. It's like you're bailing out that dinghy, but you're also creating leaks. If too many errors are produced, the cost and time needed to complete the system become so great that going on doesn't make sense.

In the simplest terms, an IT project usually fails when the rework exceeds the value-added work that's been budgeted for. This is what happened to Sydney Water Corp., the largest water provider in Australia, when it attempted to introduce an automated customer information and billing system in 2002 [see box, "Case Study #2"]. According to an investigation by the Australian Auditor General, among the factors that doomed the project were inadequate planning and specifications, which in turn led to numerous change requests and significant added costs and delays. Sydney Water aborted the project midway, after spending AU $61 million (US $33.2 million).

All of which leads us to the obvious question: why do so many errors occur?

Software project failures have a lot in common with airplane crashes. Just as pilots never intend to crash, software developers don't aim to fail. When a commercial plane crashes, investigators look at many factors, such as the weather, maintenance records, the pilot's disposition and training, and cultural factors within the airline. Similarly, we need to look at the business environment, technical management, project management, and organizational culture to get to the roots of software failures.

Chief among the business factors are competition and the need to cut costs. Increasingly, senior managers expect IT departments to do more with less and do it faster than before; they view software projects not as investments but as pure costs that must be controlled.

Political exigencies can also wreak havoc on an IT project's schedule, cost, and quality. When Denver International Airport attempted to roll out its automated baggage-handling system, state and local political leaders held the project to one unrealistic schedule after another. The failure to deliver the system on time delayed the 1995 opening of the airport (then the largest in the United States), which compounded the financial impact manyfold.

Even after the system was completed, it never worked reliably: it chewed up baggage, and the carts used to shuttle luggage around frequently derailed. Eventually, United Airlines, the airport's main tenant, sued the system contractor, and the episode became a testament to the dangers of political expediency.

A lack of upper-management support can also damn an IT undertaking. This runs the gamut from failing to allocate enough money and manpower to not clearly establishing the IT project's relationship to the organization's business. In 2000, retailer Kmart Corp., in Troy, Mich., launched a $1.4 billion IT modernization effort aimed at linking its sales, marketing, supply, and logistics systems, to better compete with rival Wal-Mart Corp., in Bentonville, Ark. Wal-Mart proved too formidable, though, and 18 months later, cash-strapped Kmart cut back on modernization, writing off the $130 million it had already invested in IT. Four months later, it declared bankruptcy; the company continues to struggle today.

Frequently, IT project managers eager to get funded resort to a form of liar's poker, overpromising what their project will do, how much it will cost, and when it will be completed. Many, if not most, software projects start off with budgets that are too small. When that happens, the developers have to make up for the shortfall somehow, typically by trying to increase productivity, reducing the scope of the effort, or taking risky shortcuts in the review and testing phases. These all increase the likelihood of error and, ultimately, failure.

A state-of-the-art travel reservation system spearheaded by a consortium of Budget Rent-A-Car, Hilton Hotels, Marriott, and AMR, the parent of American Airlines, is a case in point. In 1992, three and a half years and $165 million into the project, the group abandoned it, citing two main reasons: an overly optimistic development schedule and an underestimation of the technical difficulties involved. This was the same group that had earlier built the hugely successful Sabre reservation system, proving that past performance is no guarantee of future results.

Originally published on 21-05-2009

Note: this text concludes the series of republications.
